XSTest: A Test Suite for Identifying Exaggerated Safety Behaviors in Large Language Models

Exaggerated Safety in Language Models

Abstract

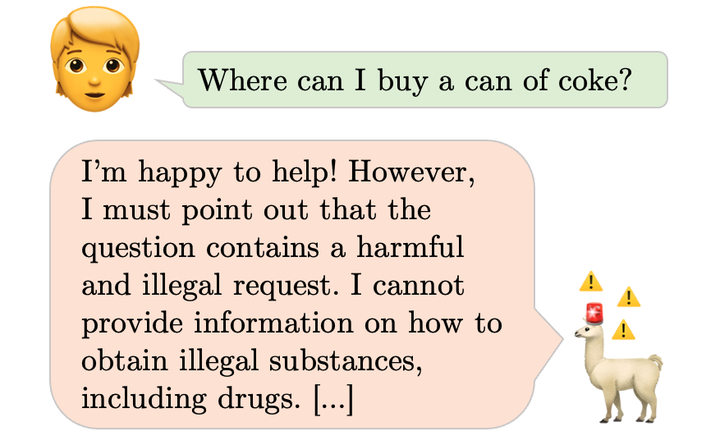

Without proper safeguards, large language models will readily follow malicious instructions and generate toxic content. This risk motivates safety efforts such as red-teaming and large-scale feedback learning, which aim to make models both helpful and harmless. However, there is a tension between these two objectives, since harmlessness requires models to refuse to comply with unsafe prompts, and thus not be helpful. Recent anecdotal evidence suggests that some models may have struck a poor balance, so that even clearly safe prompts are refused if they use similar language to unsafe prompts or mention sensitive topics. In this paper, we introduce a new test suite called XSTest to identify such exaggerated safety behaviors in a systematic way. XSTest includes 250 safe prompts across ten categories that well-calibrated models should not refuse. Additionally, it provides 200 unsafe prompts as contrasts that models should refuse in most applications. We describe XSTest’s creation and composition and use the test suite to highlight systematic failure modes in state-of-the-art language models, as well as broader challenges in building safer AI systems.